Why Deep Learning Is Suddenly Changing Your Life

16/10/2019

A 2016 Fortune news piece tells us how decades-old discoveries are now electrifying the computing industry.

Over the past four years, readers have doubtless noticed quantum leaps in the quality of a wide range of everyday technologies.

In fact, we are increasingly interacting with our computers by just talking to them, whether it’s Amazon’s Alexa, Apple’s Siri, Microsoft’s Cortana, or the many voice-responsive features of Google. Chinese search giant Baidu says customers have tripled their use of its speech interfaces in the past 18 months.

Machine translation and other forms of language processing have also become far more convincing, with Google, Microsoft, Facebook, and Baidu unveiling new tricks every month.

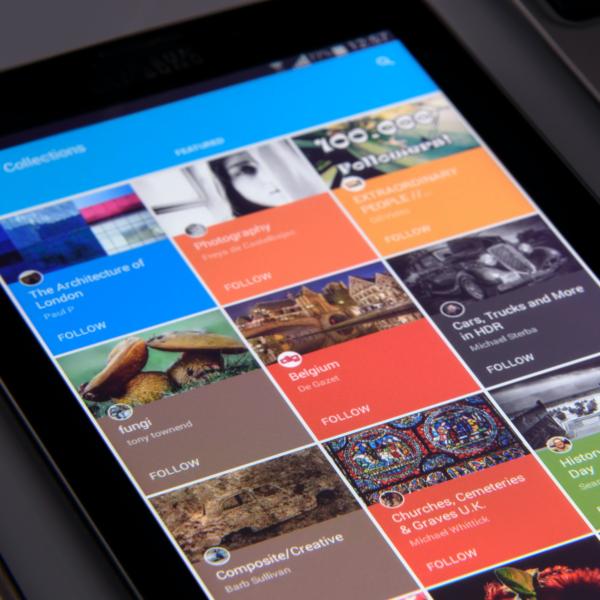

Then there are the advances in image recognition, such as photo apps that can pull every picture of a dog out of a collection. Think about that. To gather up dog pictures, the app must identify anything from a Chihuahua to a German shepherd and not be tripped up if the pup is upside down or partially obscured, at the right of the frame or the left, in fog or snow, sun or shade. At the same time it needs to exclude wolves and cats. Using pixels alone. How is that possible?

The advances in image recognition extend far beyond cool social apps. Medical startups claim they’ll soon be able to use computers to read X-rays, MRIs, and CT scans more rapidly and accurately than radiologists, to diagnose cancer earlier and less invasively, and to accelerate the search for life-saving pharmaceuticals. Better image recognition is crucial to unleashing improvements in robotics, autonomous drones, and, of course, self-driving cars. Ford, Tesla, Uber, Baidu, and Google parent Alphabet are all testing prototypes of self-piloting vehicles on public roads today.

But what most people don’t realize is that all these breakthroughs are the same breakthrough. They’ve all been made possible by a family of artificial intelligence (AI) techniques popularly known as deep learning, though most scientists still prefer to call them by their original academic designation: deep neural networks.

The most remarkable thing about neural nets is that no human being has programmed a computer to perform any of the stunts described above. In fact, no human could. Programmers have, rather, fed the computer a learning algorithm, exposed it to terabytes of data—hundreds of thousands of images or years’ worth of speech samples—to train it, and have then allowed the computer to figure out for itself how to recognize the desired objects, words, or sentences.
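To make the idea of "feeding the computer a learning algorithm" concrete, here is a minimal sketch in Python. It is not a deep neural network and not anything described in the article: it is a single artificial neuron (a perceptron), a far simpler relative, trained on a made-up four-row dataset. The function names, the learning rate, and the toy data are all assumptions chosen for illustration. The point is only that the rule the program ends up with is never written by the programmer; it is adjusted from labeled examples.

```python
# Toy illustration (assumed example, not from the article): a single
# artificial neuron learns a rule from labeled examples instead of
# having that rule written by hand.

def train_perceptron(examples, epochs=20, learning_rate=0.1):
    """Learn weights and a bias from (features, label) pairs; labels are 0 or 1."""
    n_features = len(examples[0][0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            activation = sum(w * x for w, x in zip(weights, features)) + bias
            prediction = 1 if activation > 0 else 0
            # The error is 0 when the guess is right, +1 or -1 when it is wrong.
            error = label - prediction
            # Nudge each weight in the direction that reduces the error.
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias


def predict(weights, bias, features):
    """Apply the learned rule to one input."""
    activation = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if activation > 0 else 0


# Hypothetical training data: output 1 if either input is 1 (logical OR).
EXAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

weights, bias = train_perceptron(EXAMPLES)
predictions = [predict(weights, bias, features) for features, _ in EXAMPLES]
```

After training, `predictions` agrees with the labels in `EXAMPLES`, even though no line of the program states the OR rule. Deep learning systems apply the same basic loop of guessing, measuring the error, and adjusting, but with many layers of such units and vastly more data.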

In short, such computers can now teach themselves. “You essentially have software writing software,” says Jen-Hsun Huang, CEO of graphics processing leader Nvidia, which began placing a massive bet on deep learning about five years ago.

That dramatic progress has sparked a burst of activity. Equity funding of AI-focused startups reached an all-time high last quarter of more than $1 billion, according to the CB Insights research firm. Google had two deep-learning projects underway in 2012. Today it is pursuing more than 1,000, according to a spokesperson, in all its major product sectors, including search, Android, Gmail, translation, maps, YouTube, and self-driving cars. IBM’s Watson system used AI, but not deep learning, when it beat two Jeopardy champions in 2011. Now, though, almost all of Watson’s 30 component services have been augmented by deep learning, according to Watson CTO Rob High.

Venture capitalists, who didn’t even know what deep learning was five years ago, today are wary of startups that don’t have it.